If you have been playing with Docker containers for a while, you have probably noticed that once several containers are running across many VMs it becomes difficult to manage them, or even to remember which container runs on which VM.

VMware's answer to this problem is called vSphere Integrated Containers (or VIC).

With VIC your Docker hosts (the VMs that run the containers) are no longer a black box: they show up like VMs in your vCenter server, exposing every property a VM holds.

You can get VIC here: https://vmware.github.io/vic/ but you will need to build it from source.

Otherwise you can download the binaries from Bintray: https://bintray.com/vmware/vic/

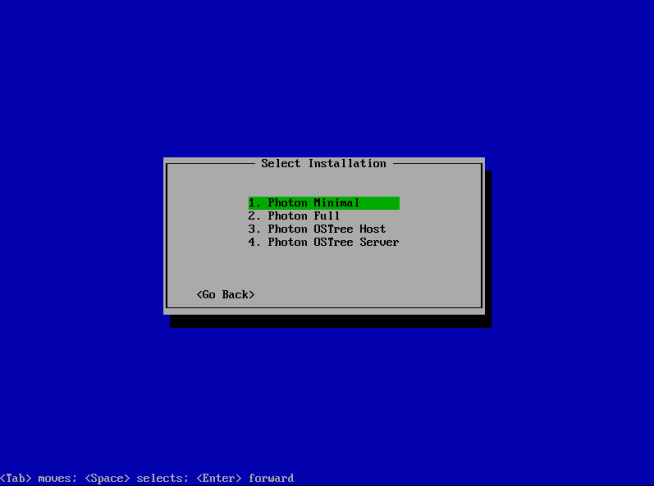

I will use the template I created in a previous post to deploy and manage VIC on my vCenter.

Deploying from a template doesn’t seem to work with PhotonOS, so the customizations need to be handled manually. I just cloned the template into a new VM named “VIC”.

Let’s customize our PhotonOS:

vi /etc/hostname # edit hostname

cd /etc/systemd/network/

cp 10-dhcp-en.network 10-static-en.network

vi 10-static-en.network # set static ip address as follows

[Match]

Name=eth0

[Network]

Address=192.168.110.11/24

Gateway=192.168.110.254

DNS=192.168.110.10

chmod 644 10-static-en.network

systemctl restart systemd-networkd
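The static configuration above can also be written in one step instead of being edited by hand. This is a minimal sketch, assuming the interface name and addresses used in this lab; `write_static_network` is a hypothetical helper name, and the target path is an argument so you can inspect the file before placing it in /etc/systemd/network/.

```shell
# Hypothetical helper: writes a systemd-networkd static config to the given path.
# Arguments: <path> <address/prefix> <gateway> <dns>
write_static_network() {
    cat > "$1" <<EOF
[Match]
Name=eth0

[Network]
Address=$2
Gateway=$3
DNS=$4
EOF
    # systemd-networkd does not run as root, so the file must be world-readable
    chmod 644 "$1"
}

# Example (values taken from this lab):
# write_static_network /etc/systemd/network/10-static-en.network \
#     192.168.110.11/24 192.168.110.254 192.168.110.10
# systemctl restart systemd-networkd
```

Keep in mind that systemd-networkd applies the first file, in lexical order, that matches an interface. If the original 10-dhcp-en.network is still present and the static address does not take effect, renaming or removing the DHCP file is one thing to try.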

tdnf install tar wget -y

wget -O vic_0.8.0.tar.gz "https://bintray.com/vmware/vic/download_file?file_path=vic_0.8.0.tar.gz" # quote the URL and save it under a clean file name

tar xzvf vic_0.8.0.tar.gz

Now SSH to each ESXi host that will run VIC and add a firewall rule allowing outbound TCP traffic on port 2377, so that VIC will not get blocked:

vi /etc/vmware/firewall/vch.xml

<!-- Firewall configuration information -->

<ConfigRoot>

<service id='0042'>

<id>VCH</id>

<rule id='0000'>

<direction>outbound</direction>

<protocol>tcp</protocol>

<porttype>dst</porttype>

<port>2377</port>

</rule>

<enabled>true</enabled>

<required>true</required>

</service>

</ConfigRoot>

esxcli network firewall refresh

esxcli network firewall ruleset list

You should be able to see a rule called “VCH” enabled.
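If you have several hosts to check, the same verification can be scripted. This is a rough sketch, assuming `awk` is available in the ESXi shell and that the ruleset list prints the name in the first column and the enabled state in the second; `vch_ruleset_enabled` is a hypothetical helper name.

```shell
# Reads `esxcli network firewall ruleset list` output on stdin and
# succeeds only if a ruleset named VCH is listed as enabled.
vch_ruleset_enabled() {
    awk '$1 == "VCH" && $2 == "true" { found = 1 } END { exit (found ? 0 : 1) }'
}

# Example, on the ESXi host:
# esxcli network firewall ruleset list | vch_ruleset_enabled && echo "VCH rule enabled"
```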

Now you have to create a Virtual Distributed PortGroup; I called mine “Docker-Bridge”.

Back on the VIC virtual machine, change into the directory containing the extracted VIC executables:

cd vic/

./vic-machine-linux create --target administrator@vsphere.local:password@vcenterFQDN/Datacenter --tls-cname vch --image-store vsanDatastore --public-network LAN --bridge-network Docker-Bridge --no-tlsverify --force

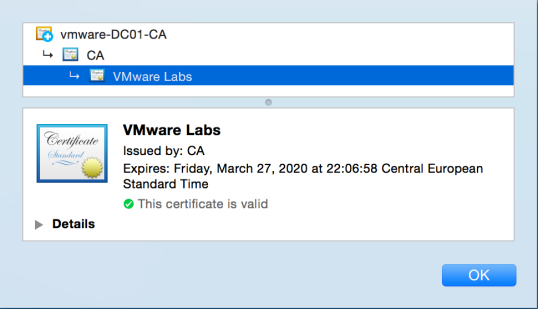

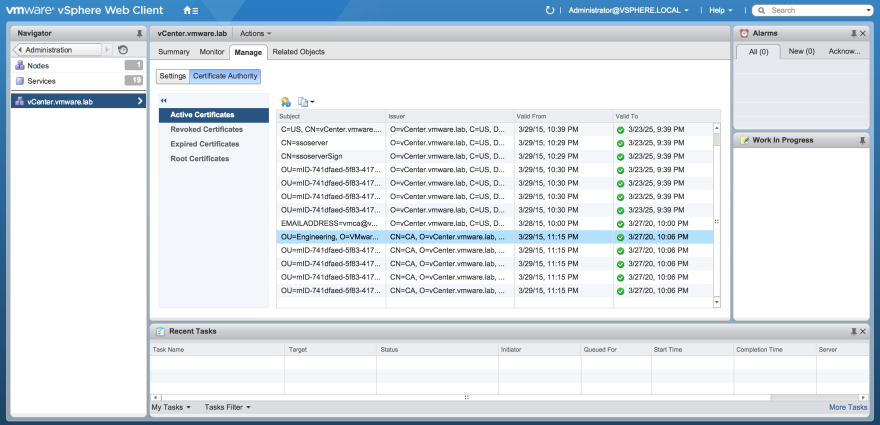

Since I’m using self-signed certificates in my lab I had to work around some certificate checking problems:

--no-tlsverify: Disables client-side certificates for authentication

--force: Skips the certificate check for the destination vCenter; otherwise you would need to supply the certificate thumbprint
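If you would rather supply the thumbprint than skip the check, it can be read from the vCenter certificate with openssl. This is a sketch, assuming openssl is installed on the VIC machine and that your vic-machine build accepts a `--thumbprint` option (check the create help output before relying on it); `fingerprint_value` and `vcenter_thumbprint` are hypothetical helper names.

```shell
# Strips the "SHA1 Fingerprint=" label from openssl's output, leaving AA:BB:...
fingerprint_value() {
    sed 's/^.*Fingerprint=//'
}

# Prints the SHA-1 thumbprint of the certificate served at <host>:443
vcenter_thumbprint() {
    openssl s_client -connect "$1:443" </dev/null 2>/dev/null \
        | openssl x509 -noout -fingerprint -sha1 \
        | fingerprint_value
}

# Example:
# vcenter_thumbprint vcenter.vmware.lab
# The value could then be passed to vic-machine in place of --force,
# assuming the --thumbprint option is supported by your version.
```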

The create command should produce output similar to this:

INFO[2016-12-24T18:27:47Z] ### Installing VCH ####

WARN[2016-12-24T18:27:47Z] Using administrative user for VCH operation - use --ops-user to improve security (see -x for advanced help)

INFO[2016-12-24T18:27:47Z] Loaded server certificate virtual-container-host/server-cert.pem

WARN[2016-12-24T18:27:47Z] Configuring without TLS verify - certificate-based authentication disabled

INFO[2016-12-24T18:27:47Z] Validating supplied configuration

INFO[2016-12-24T18:27:47Z] vDS configuration OK on "Docker-Bridge"

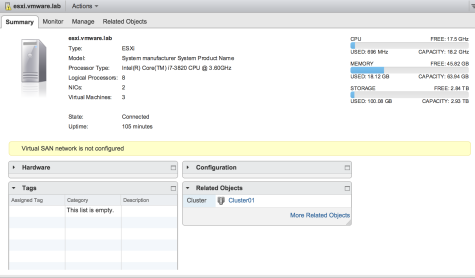

INFO[2016-12-24T18:27:47Z] Firewall status: ENABLED on "/Datacenter/host/Cluster/esxi.vmware.lab"

INFO[2016-12-24T18:27:47Z] Firewall configuration OK on hosts:

INFO[2016-12-24T18:27:47Z] "/Datacenter/host/Cluster/esxi.vmware.lab"

INFO[2016-12-24T18:27:47Z] License check OK on hosts:

INFO[2016-12-24T18:27:47Z] "/Datacenter/host/Cluster/esxi.vmware.lab"

INFO[2016-12-24T18:27:47Z] DRS check OK on:

INFO[2016-12-24T18:27:47Z] "/Datacenter/host/Cluster/Resources"

INFO[2016-12-24T18:27:47Z]

INFO[2016-12-24T18:27:47Z] Creating virtual app "virtual-container-host"

INFO[2016-12-24T18:27:47Z] Creating appliance on target

INFO[2016-12-24T18:27:47Z] Network role "management" is sharing NIC with "client"

INFO[2016-12-24T18:27:47Z] Network role "public" is sharing NIC with "client"

INFO[2016-12-24T18:27:49Z] Uploading images for container

INFO[2016-12-24T18:27:49Z] "bootstrap.iso"

INFO[2016-12-24T18:27:49Z] "appliance.iso"

INFO[2016-12-24T18:27:55Z] Waiting for IP information

INFO[2016-12-24T18:28:06Z] Waiting for major appliance components to launch

INFO[2016-12-24T18:28:06Z] Checking VCH connectivity with vSphere target

INFO[2016-12-24T18:28:06Z] vSphere API Test: https://vcenter.vmware.lab vSphere API target responds as expected

INFO[2016-12-24T18:28:09Z] Initialization of appliance successful

INFO[2016-12-24T18:28:09Z]

INFO[2016-12-24T18:28:09Z] VCH Admin Portal:

INFO[2016-12-24T18:28:09Z] https://192.168.110.57:2378

INFO[2016-12-24T18:28:09Z]

INFO[2016-12-24T18:28:09Z] Published ports can be reached at:

INFO[2016-12-24T18:28:09Z] 192.168.110.57

INFO[2016-12-24T18:28:09Z]

INFO[2016-12-24T18:28:09Z] Docker environment variables:

INFO[2016-12-24T18:28:09Z] DOCKER_HOST=192.168.110.57:2376

INFO[2016-12-24T18:28:09Z]

INFO[2016-12-24T18:28:09Z] Environment saved in virtual-container-host/virtual-container-host.env

INFO[2016-12-24T18:28:09Z]

INFO[2016-12-24T18:28:09Z] Connect to docker:

INFO[2016-12-24T18:28:09Z] docker -H 192.168.110.57:2376 --tls info

INFO[2016-12-24T18:28:09Z] Installer completed successfully

Now you can query the Docker API endpoint, in my case:

docker -H 192.168.110.57:2376 --tls info

If you see this you are good to go:

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 0

Server Version: v0.8.0-7315-c8ac999

Storage Driver: vSphere Integrated Containers v0.8.0-7315-c8ac999 Backend Engine

VolumeStores:

vSphere Integrated Containers v0.8.0-7315-c8ac999 Backend Engine: RUNNING

VCH mhz limit: 10376 Mhz

VCH memory limit: 49.85 GiB

VMware Product: VMware vCenter Server

VMware OS: linux-x64

VMware OS version: 6.0.0

Plugins:

Volume:

Network: bridge

Swarm:

NodeID:

Is Manager: false

Node Address:

Security Options:

Operating System: linux-x64

OSType: linux-x64

Architecture: x86_64

CPUs: 10376

Total Memory: 49.85 GiB

Name: virtual-container-host

ID: vSphere Integrated Containers

Docker Root Dir:

Debug Mode (client): false

Debug Mode (server): false

Registry: registry-1.docker.io

You might get an error similar to “Error response from daemon: client is newer than server (client API version: 1.24, server API version: 1.23)”. This happens because the Docker client installed in PhotonOS can be newer than the Docker API endpoint.

You can fix this by pinning the client to the older API version:

echo "export DOCKER_API_VERSION=1.23" >> ~/.bash_profile

source ~/.bash_profile

If you try again now you should be good.

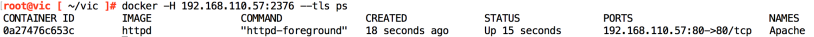

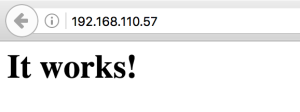
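One caveat with the profile edit above: running the `echo ... >>` line more than once adds duplicate entries to ~/.bash_profile. A small sketch of an idempotent alternative follows; `append_once` is a hypothetical helper name, not part of Docker or PhotonOS.

```shell
# Hypothetical helper: appends a line to a file only if it is not already there.
# Arguments: <file> <line>
append_once() {
    # -x matches the whole line, -F treats the text literally, -q stays quiet
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Example:
# append_once ~/.bash_profile 'export DOCKER_API_VERSION=1.23'
# . ~/.bash_profile
```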

You can run standard Docker commands using the API endpoint we just created, so for example we can run Apache in a container like so:

docker -H 192.168.110.57:2376 --tls run -d --name "Apache" -p 80:80 httpd

docker -H 192.168.110.57:2376 --tls ps
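Typing the endpoint and `--tls` on every command gets repetitive. One way around it is a small shell wrapper; this is only a sketch, where `vch` and `VCH_ENDPOINT` are hypothetical names and the address is the one reported by vic-machine in my lab.

```shell
# Endpoint reported by vic-machine in this lab; change it to match your VCH
VCH_ENDPOINT="192.168.110.57:2376"

# Hypothetical wrapper: forwards any docker subcommand to the VCH over TLS
vch() {
    docker -H "$VCH_ENDPOINT" --tls "$@"
}

# Examples:
# vch info
# vch ps
```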

If we try to point a browser to “192.168.110.57”:

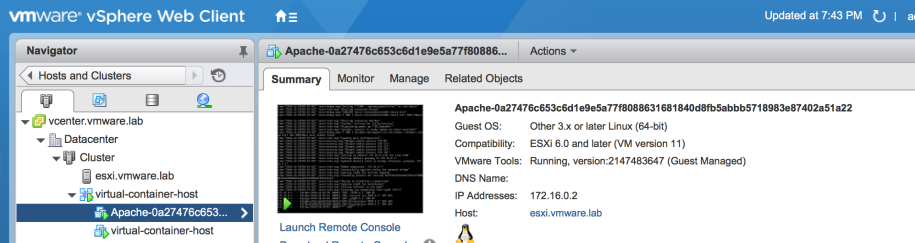

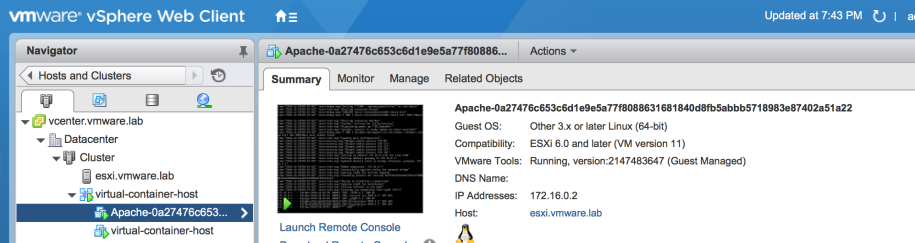
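The browser check can also be done from the command line, which is handy because the container VM may need a few seconds before it answers. This is a minimal sketch, assuming curl is available on the machine you run it from; `wait_for_http` is a hypothetical helper name.

```shell
# Hypothetical helper: polls a URL until it answers or the attempts run out.
# Arguments: <url> [attempts, default 10]
wait_for_http() {
    url=$1
    attempts=${2:-10}
    i=0
    while [ "$i" -lt "$attempts" ]; do
        # -f makes curl fail on HTTP errors, -s silences the progress output
        if curl -fs -o /dev/null "$url"; then
            return 0
        fi
        i=$((i + 1))
        sleep 1
    done
    return 1
}

# Example:
# wait_for_http http://192.168.110.57/ && echo "Apache is answering"
```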

Let’s take a look at vCenter to see what has been created:

You can see the Docker API endpoint represented by the VM called “virtual-container-host” but also the container itself!

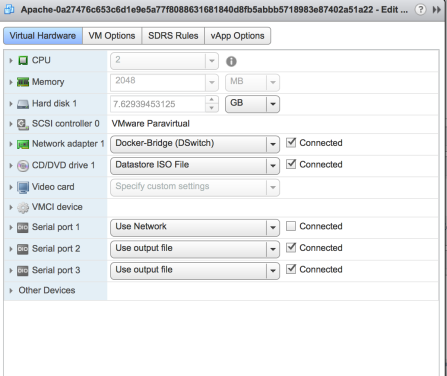

As you can see we have information about what is running, the container ID, the internal container IP Address etc.

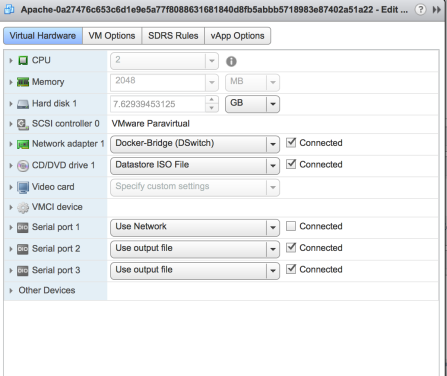

You can even go ahead and edit the virtual hardware as if it were a VM!

Notice how it’s using the vdPortGroup that we created earlier.

To clean up:

docker -H 192.168.110.57:2376 --tls stop Apache

./vic-machine-linux delete --target administrator@vsphere.local:password@vcenterFQDN/Datacenter --force

And this is the coolest way ever to use containers with vSphere!

Note: a vdPortGroup is mandatory, which means you need a vdSwitch, and so you must be running the Enterprise Plus edition of vSphere.

Update: VIC is now GA with vSphere 6.5 for all Enterprise Plus users.
